The Missing Map For Containers In Production

In this episode, I will be running you through a bit of a crash course on the terminology and tooling involved in running a larger production container stack. This can be a complex topic, so here is a brain dump of how I make sense of it all (map included).

Introduction

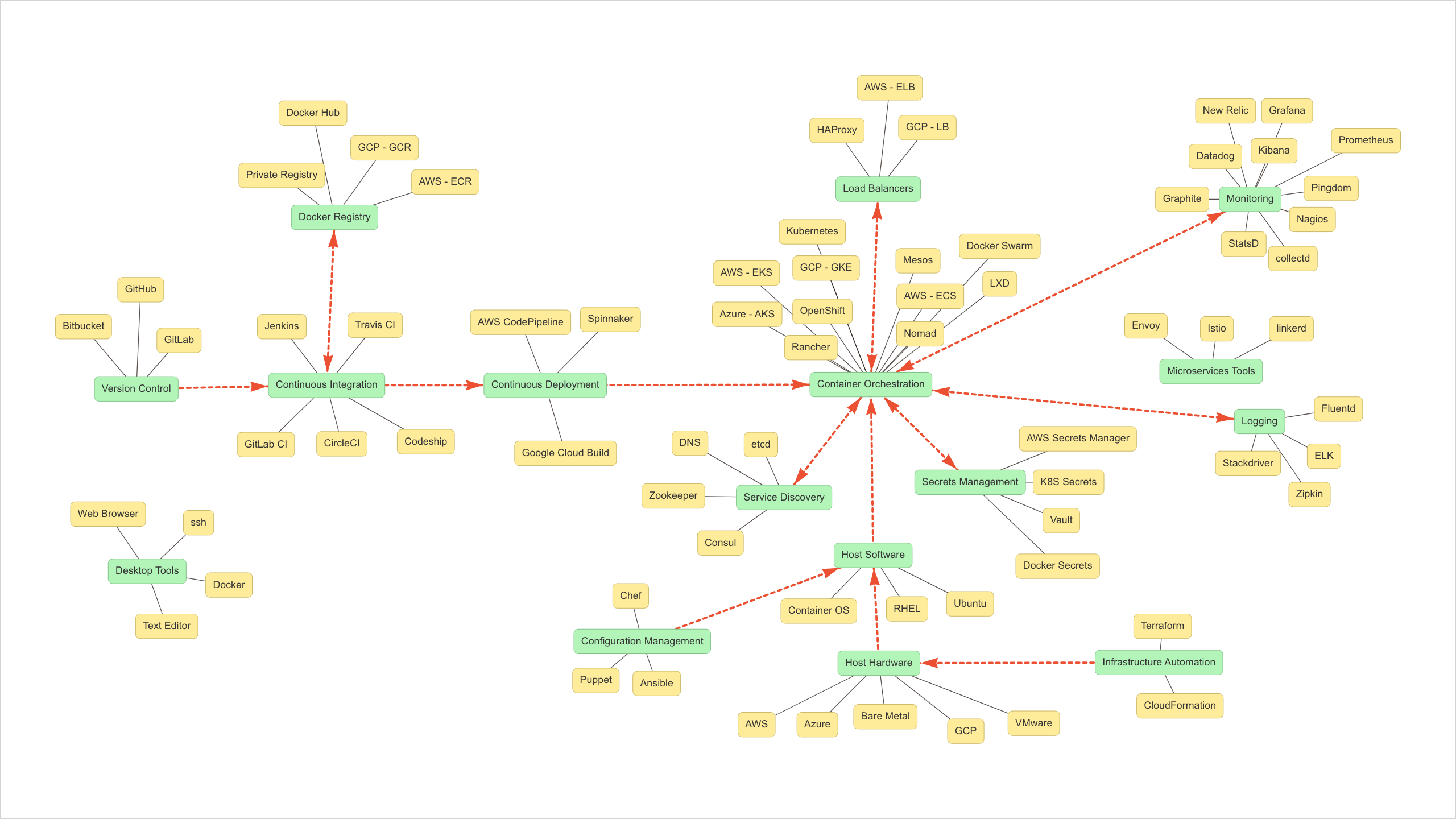

What you are looking at is a map that highlights the major functional categories that exist in most container stacks (highlighted in green here). These include things like version control, continuous integration, orchestration, logging, monitoring, etc. Then, revolving around all these major category areas are popular tools that enable these types of functionality or workflows. For example, GitHub is extremely popular for Version Control, Jenkins for Continuous Integration, and Kubernetes for Container Orchestration. You get the idea.

But why create a map like this? Well, I wanted to give you a big-picture view of what a complex system looks like, because individually these tools can be incredibly confusing for the uninitiated. They often have strange names and, in isolation, their functionality often makes no sense.

To make my point, let me run a couple of phrases by you that you might hear if you are reading up about running containers, or maybe watching a talk at a conference.

Here goes:

- We are using Zipkin for Distributed Tracing

- Oh yeah, we are using Zookeeper for Service Discovery

- Istio is acting as our Service Mesh

Hopefully you are starting to see what I am talking about. If you do not have the mental map of how things are connected, these phrases just do not make sense. This is why I created the map, and why I will act as sort of a tour guide as we walk through it. But this is different from, say, a vendor-specific reference architecture diagram, as we are looking at it from a higher level. My goal is to help you get a more general sense of how things work vs a very specific use case.

So, we are going to walk through, step-by-step, not only what the major functional categories of tooling are, but more importantly, why people are using them!

Three Example Use Cases

To understand this better, maybe let’s back up for a second, as I wanted to focus on what is driving companies to choose this type of production stack. This will hopefully give some context into why we are doing what we are doing here.

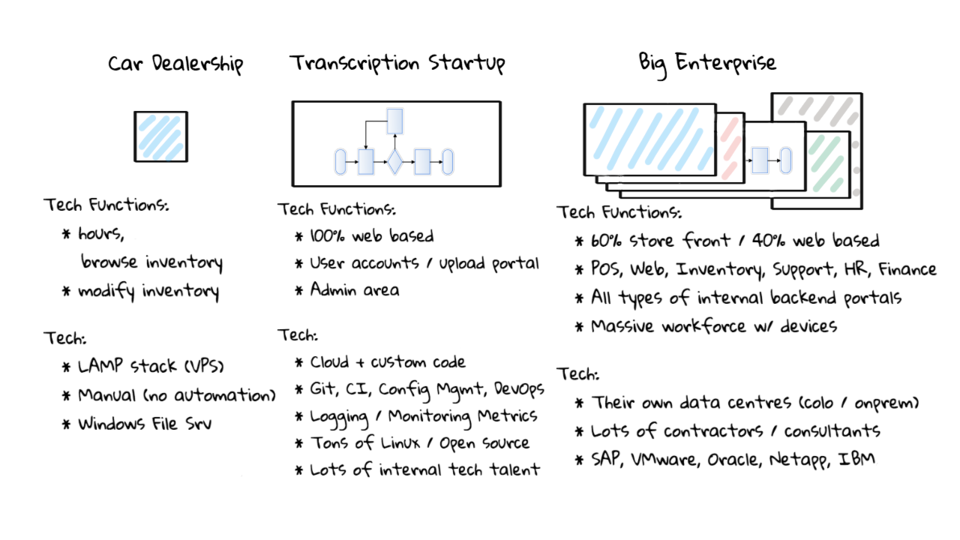

Here are three example companies.

First, a Small Business, that’s running a company website. For the sake of example, let’s say they are a car dealership, and they are hosting a fairly simple website with information about their business and car inventory. I guess we could say this is a very simple database driven web app. Probably using a Linux, Apache, MySQL, and PHP stack. Right now it is hosted on a cheap hosting provider.

Second, we have an Internet Startup. They are doing audio transcription. The idea is you can upload your audio files, they will process them, and return a text transcript. They are totally in the cloud, have lots of developers, and a small but powerful devops team. They are totally focused on staying alive as a company, keeping bills super low, and trying to outmaneuver the competition.

Finally, we have a Mega Corp in the Media and Entertainment industry. They are not in the cloud, have their own data centers, and most of their developers and operations staff are contracted out. They have tons of legacy application baggage, have acquired lots of companies, and their infrastructure was sort of mashed together. It is a complete rat’s nest. They are paying hundreds of millions in ongoing software licensing fees each year.

As you can see, we quickly escalated the complexity here. But the chances are that you will find yourself somewhere on this spectrum of companies. This is why giving advice about container adoption is so hard. The needs of the example car dealership and the mega corp are massively different. Advice you give to one would be crazy for the other.

Should you adopt a stack like this at your company? Honestly, it really depends on your current and future situation. In the car dealership case here, it would be total overkill. Sure, it might be cool to learn about these tools, but you are likely way better off using something like a hosted container PaaS, maybe Heroku or App Engine.

What about the medium-sized internet startup? Well, I would wonder how they are doing things today without containers. Maybe they are using something with AWS, and have a pretty good system worked out with instance groups and auto scalers. You’d really have to look and see what the pros and cons are. If they really want to save money, they might be able to drive utilization up using containers, and break things down to get the most performance. It is highly dependent on their current situation.

What about the Mega Corp here? Often times, you wonder what the heck these companies actually look like on the inside. Well, they sort of look like the startup’s infrastructure, but in their own data centers, using technology from 10 years ago. Okay, maybe not that bad, but close. Plus, there are maybe 100 times more applications of all types. In my opinion, this is where containers can really save tens of millions a year, through consolidation of infrastructure and getting rid of extremely expensive maintenance contracts. But again, it sort of takes the willpower to see a big change like this through.

So, I guess the answer to the question of whether you should adopt containers is that it really depends. There are so many options to choose from, from a hosted PaaS like Heroku or App Engine, all the way to a totally open-source system run in your own data centers. You really need to do the research and figure it out.

Here are some questions to think about, and have answers for, so you can choose a good option.

Gather the basics about your current setup:

- What type of applications are you hosting today?

- What do your current production environments look like?

- Are there any issues / burning fires?

- Where is it hosted? (Colo, AWS, etc)

- Do you have existing Red Hat contracts?

- How much traffic are you getting?

- How much are you paying today?

- Are you running Linux, Windows, or both?

More questions to think about:

- Does your team know what containers are? Do they need training?

- The pros should outweigh the cons

- Is the added overhead justifiable?

- Do you have staff on hand / consultants to do the work? (training?)

- Are you using containers?

- Can your apps be containerized?

- Do you have the source code if needed? (stateless vs stateful)

- Do you expect HA?

- What are your uptime requirements?

- What are the potential savings or performance improvements?

- Can you use a PaaS vs something more complex?

- Timelines?

- What’s the customer impact if something customer facing goes down? Do you host a shopping cart for Black Friday (for example)?

Why you might want to think about using containers:

- Increased developer velocity (lots more changes into production)

- Increased overall system uptime (self healing)

- Decrease the amount of Ops time (pretty easy to automate / scale)

- Potentially costs less (better use of system resources)

- Get rid of expensive service contracts

The answers to many of these questions will likely drive you down the path of specific options. For example, maybe you have a big Microsoft licensing deal at your company, so maybe Azure is a good option? Or maybe it was Red Hat, and you should explore OpenShift. Honestly, there are so many factors that there is never a right answer without digging in and getting your hands dirty. That is why I wanted to focus on the functional categories that this type of stack offers, as these will likely exist no matter the end platform you choose (or have chosen for you).

Functional Category Breakdown

Cool, let’s jump back to the containers in production map. I really want to stress that you should focus on these tool categories, as it does not really matter which platform you end up using, they will all likely have something that looks like this. This applies to PaaS type offerings too.

Let’s start from the ground up here.

Host Hardware - Depending on the solution you choose, you will likely need to look after some type of hardware, this might be virtual machines backing your cluster, or some type of bare metal sitting in a data center. My personal preference is to put everything in the cloud, as you can really scale, and hardware issues are pretty much a non-issue, as you can just fire up new instances.

Infrastructure Automation - I recommend infrastructure automation tools, like Terraform, because they come in extremely handy for automating the setup of cloud infrastructure pieces in a repeatable manner. If your container platform does not already do this, and you are using something Terraform supports, like AWS or Google Cloud, I would recommend checking it out.

Host Software - the next level up here is the host operating system. If you are running things yourself, this will typically be some type of minimal Linux operating system, with just the essentials to get you going. This might be RHEL for OpenShift, or maybe Ubuntu, or a stripped down Container Optimized Image on Google Cloud. CoreOS lives in this area. Same with Rancher.

Configuration Management - Next, you will typically see some type of configuration management here, to make sure all your systems are similar. This really depends on the type of solution you choose. If it is something by a cloud provider, they will typically look after this type of stuff for you, or have scripts that execute at boot. But the core idea is that you want all your systems to look the same, as they are essentially worker nodes used to run containers.

Container Orchestration - Okay, we are getting into the good stuff now. Container Orchestration, what does that even mean? Well, the general concept is that we have some containers, and we want to give them to a program to run, and then forget about it. So, these tools are orchestrating the deployment of containers. You can see by the cluster of tools here that it is a pretty crowded space. Every cloud provider has their own flavour of Kubernetes: AWS EKS, Azure AKS, GCP GKE. Then you have things like OpenShift and Docker Enterprise. So, no matter where you want to run this, you can likely do it. Things like Docker Swarm, Nomad, and Mesos also live here. This is very much the heart of a container production system.
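To make the "hand it over and forget about it" idea a little more concrete, here is a minimal Python sketch of the kind of comparison an orchestrator might make between how many containers you asked for and how many are actually running. The `reconcile` function and the container names are made up for illustration, and real tools do far more than this (scheduling, health checks, rolling updates).

```python
from typing import Dict, List


def reconcile(desired: int, running: List[str]) -> Dict[str, object]:
    """Compare the desired replica count against what is running.

    Returns how many new containers to start and which running
    containers to stop. Hypothetical helper, not a real tool's API.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    missing = desired - len(running)
    if missing > 0:
        # Too few containers (for example, one crashed): start more.
        return {"start": missing, "stop": []}
    # Enough or too many: stop everything beyond the desired count.
    return {"start": 0, "stop": running[desired:]}


# One of three containers has died, so two more need to be started.
print(reconcile(3, ["web-1"]))
# We scaled down to one replica, so the extras get stopped.
print(reconcile(1, ["web-1", "web-2", "web-3"]))
```

An orchestrator would run a check like this over and over, which is roughly where the self-healing behaviour comes from.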

Service Discovery - Next, we have Service Discovery. This sounds sort of complex, but it is really a simple concept. We all understand DNS: you looked up sysadmincasts.com and got my IP address, and that is how you are watching this video. That is Service Discovery. However, container systems often need something a little more specialized, since you often need not only an IP address, but also a port number. Containers also make it extremely easy to scale your application up and down, so you need really fresh replies with no caching. That is why these tools exist. I basically think of them as specialized DNS for containers.
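If it helps, here is a toy Python sketch of that "specialized DNS" idea: a lookup by service name that returns both an address and a port, and always answers from the current state rather than a cache. The `ServiceRegistry` class, the service name, and the addresses are all invented for this example, and real tools keep this state in a replicated store rather than in memory.

```python
from typing import Dict, List, NamedTuple, Set


class Instance(NamedTuple):
    host: str
    port: int


class ServiceRegistry:
    """Toy in-memory registry: service name -> set of live instances."""

    def __init__(self) -> None:
        self._services: Dict[str, Set[Instance]] = {}

    def register(self, name: str, host: str, port: int) -> None:
        # Called when a container starts.
        self._services.setdefault(name, set()).add(Instance(host, port))

    def deregister(self, name: str, host: str, port: int) -> None:
        # Called when a container stops or fails.
        self._services.get(name, set()).discard(Instance(host, port))

    def lookup(self, name: str) -> List[Instance]:
        # Always read the current state, so scale-downs show up immediately.
        return sorted(self._services.get(name, set()))


registry = ServiceRegistry()
registry.register("web", "10.0.0.5", 32768)
registry.register("web", "10.0.0.6", 32771)
registry.deregister("web", "10.0.0.5", 32768)
print(registry.lookup("web"))  # only the 10.0.0.6 instance is left
```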

Secrets Management - Another supporting service for containers is a category of tools called secrets management. Since you might have many copies of your application all running in containers, what happens if you need to change a password in one of them, or maybe update an SSL certificate? Well, it depends on the Orchestration tool you choose, but often times these containers can ask for the password, and it might appear as a temporary file on a special mount, or maybe as an environment variable. The core concept here is that you typically do not want to bake any passwords or certificate files into your Docker images. You would use a Secrets Management service.

Now that you have a pretty high-level overview of the core platform for running a container, let’s jump over to how you would actually deploy containers into something like this.
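As a rough sketch of what this looks like from inside the application, here is a small Python helper that checks a mounted directory first and then falls back to an environment variable. The `read_secret` name, the default `/run/secrets` directory, and the upper-case environment variable convention are assumptions for this example. Where secrets actually show up depends on your platform.

```python
import os
from pathlib import Path


def read_secret(name: str, secrets_dir: str = "/run/secrets") -> str:
    """Fetch a secret at runtime instead of baking it into the image.

    Looks for a file called `name` in `secrets_dir` (an assumed mount
    point), then for an environment variable named `name` in upper case.
    """
    secret_file = Path(secrets_dir) / name
    if secret_file.is_file():
        # Mounted secrets often end with a trailing newline; strip it.
        return secret_file.read_text().strip()
    value = os.environ.get(name.upper())
    if value is None:
        raise KeyError(f"secret {name!r} not found in {secrets_dir} or environment")
    return value
```

An application might then call `read_secret("db_password")` at startup, so rotating the password means updating the secret in one place rather than rebuilding every image.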

Desktop - On your desktop, you would use things like a browser, text editor, ssh, and git all the time to interact with tools in these various categories. Maybe you are checking in Dockerfiles that build some images, and they get pushed out for deployment. Or maybe you are connecting directly to Jenkins, or your Logging and Monitoring systems. For most of the infrastructure you see here, you typically configure it, and then forget about it. I put the desktop here since you will likely be interacting with all these systems.

Version Control - Next up, there is version control. I do not think this really needs an explanation. However, you will typically configure some type of notification on code submission, called a webhook. This will be used to automatically notify other systems that they need to do something. This is a great example of how Jenkins is often triggered.

Continuous Integration - Speaking of Jenkins, this is where Continuous Integration kicks in. Let’s back up for a second here. Say you are a software developer, and you are working on some code on your desktop. You check that code into Version Control, which in turn kicks off a webhook to Jenkins. Jenkins then checks out your code from Version Control, runs all your tests, builds the code, builds a container image, and pushes that to your Docker Registry. This is all sort of best-case scenario stuff here. You would need to configure all this.
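The flow above is really just an ordered list of steps that stops at the first failure. Here is a minimal Python sketch of that idea. The `run_pipeline` function and the step names are hypothetical, and each step is a stand-in function rather than a real checkout, test run, or image build.

```python
from typing import Callable, List, Tuple

# A step is a name plus a function that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first failure.

    Returns the names of the steps that completed successfully.
    """
    completed = []
    for name, action in steps:
        if not action():
            print(f"step failed: {name}, stopping pipeline")
            break
        completed.append(name)
    return completed


# Stand-in steps; a real setup would shell out to git, a test runner, and docker.
steps = [
    ("checkout", lambda: True),
    ("run tests", lambda: True),
    ("build image", lambda: True),
    ("push to registry", lambda: True),
]
print(run_pipeline(steps))
```

The important property is that a failing test means the image never gets built or pushed.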

Docker Registry - Your Registry is used to hold your Docker images. You can use Docker Hub, but it highly depends on where you are running your container production setup. If you are using a cloud, most of them provide one, and it will likely be really fast since you are on their network. If you are running something like OpenShift, they have one too, so it really depends.

Continuous Delivery - So, we checked our code into version control, it was tested and compiled, and a Docker image was built and then pushed to the Docker Registry. This is where things get a little interesting. Many people will just use Jenkins to fire off some type of automated deployment, or custom scripts, that will actually do something here. This is what Continuous Delivery typically means: hey, I have new code and I want to deploy it, so send it over to the container platform. There are all types of fancy tools here, but often simple scripts will get you by. So, at this point we have our example application sent over to the Container Orchestration category, where it is now happily running in production.

Logging - This is where Logging really comes into play. Say you have a container that has a high amount of traffic hitting it, so you scale it to 10 instances. Now you have basically 10 programs all streaming out logging information. Pulling those streams together is the job of the Logging category of tools. Often times you can use something built into your cloud provider, or something like ELK, but this is a pretty critical category of tools for helping debug problems, or just verifying things are working as you expect.
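To show why you want this, here is a small Python sketch that merges the log streams from several instances into one timeline, tagging each line with the instance it came from. The `merge_logs` function, the instance names, and the log lines are made up, and it assumes each stream is already sorted by timestamp. A real logging stack also handles shipping, storage, and search.

```python
import heapq
from typing import Dict, List, Tuple

LogLine = Tuple[int, str]  # (timestamp, message)


def merge_logs(streams: Dict[str, List[LogLine]]) -> List[Tuple[int, str, str]]:
    """Merge per-instance logs into one list ordered by timestamp.

    Each input stream must already be sorted by timestamp.
    """
    tagged = (
        [(ts, instance, message) for ts, message in lines]
        for instance, lines in streams.items()
    )
    return list(heapq.merge(*tagged))


streams = {
    "web-1": [(1, "GET /"), (4, "GET /about")],
    "web-2": [(2, "GET /inventory"), (3, "500 Internal Server Error")],
}
for ts, instance, message in merge_logs(streams):
    print(ts, instance, message)
```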

Monitoring - Next we have monitoring tools. This plays into the idea of making sure things are working as we expect. You might take those logs and run them through some type of system to make sure we do not exceed any rate limits or timeout limits or something. For me, the lines are often blurred between Logging and Monitoring tools, as they are often the same or work extremely closely with each other.
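A very simple version of that kind of check might look like the Python sketch below: take request latencies pulled out of the logs and flag it when too many of them exceed a timeout. The function names, the 1000 ms timeout, and the 5% threshold are all assumptions picked for the example, not recommendations.

```python
from typing import List


def slow_fraction(latencies_ms: List[float], timeout_ms: float) -> float:
    """Fraction of requests that took longer than the timeout."""
    if not latencies_ms:
        return 0.0
    slow = sum(1 for latency in latencies_ms if latency > timeout_ms)
    return slow / len(latencies_ms)


def should_alert(
    latencies_ms: List[float],
    timeout_ms: float = 1000.0,
    max_fraction: float = 0.05,
) -> bool:
    """Alert when more than max_fraction of requests exceed the timeout."""
    return slow_fraction(latencies_ms, timeout_ms) > max_fraction


sample = [120, 340, 1500, 90]
print(slow_fraction(sample, 1000.0))  # one of four requests was slow
print(should_alert(sample))
```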

Load Balancing - Now that you have your application running, you will likely want to route traffic to it, so load balancers come in really handy. It seems each Container Orchestration tool likes to do something around this. The general idea is that you might have 10 containers all running your application, so you need a way to load balance the requests across those instances. Kubernetes does this, Docker Swarm does this, but if you are using a cloud provider, typically you would use their Load Balancers in front, and then route traffic in that way. This is more of a high-level thing that you will need to think about, as it is pretty platform specific.
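The simplest way to picture this is round-robin: hand each new request to the next container in the list and wrap around at the end. Here is a minimal Python sketch of that. The `RoundRobinBalancer` class and the backend addresses are invented for illustration, and real load balancers also do health checks and often use smarter strategies.

```python
import itertools
from typing import Iterable


class RoundRobinBalancer:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends: Iterable[str]) -> None:
        backend_list = list(backends)
        if not backend_list:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(backend_list)

    def next_backend(self) -> str:
        # Each call returns the next backend, wrapping around at the end.
        return next(self._cycle)


balancer = RoundRobinBalancer(["10.0.0.5:32768", "10.0.0.6:32771", "10.0.0.7:32775"])
for _ in range(4):
    print(balancer.next_backend())
```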

Microservice Tools - If what we have covered so far is not enough, there are all types of container and microservices tools that can be used in conjunction with these tools. For example, Istio can be used as a type of proxy, also called a service mesh, that basically wraps your application and can do encryption and smart request routing. I will actually be demoing this in an upcoming episode in a few weeks.

This is a quick guided tour of what the general categories are for a pretty well-rounded production platform. You might notice something here: I never talked about Storage. That is because it will be highly dependent on your platform. You might be using NFS, Block Storage like AWS EBS, or some type of scratch space mounted in. Storage and containers can be challenging given the transient nature of containers, and it requires some planning and lots of testing to make sure it works like you expect it to.

This might look complex, but most Cloud Providers have offerings where you can get this up and running in a matter of minutes to hours. The cool thing about a setup like this is that once you have it up and running, it is mostly automated. Any future projects can just take advantage of this new platform for free. You will hit a threshold where this makes sense. It is sort of the economies of scale for application hosting. If you are just running one really simple application, maybe all of this infrastructure does not make sense. But if you have multiple applications, all of a sudden this is more of a platform and it starts to make much more sense. In fact, you would almost be crazy not to use it.

Final Thoughts

Okay, well hopefully you found this at least somewhat useful. I can pretty much guarantee you that anyone who is running containers in production at a larger scale has something that looks very similar to this. At the very least, their platform will have these functional areas. So, you have some common ground for understanding what they are doing. This map is available on my website via the top navigation bar.

Alright, that is it for this episode, thanks for watching. I will see you next week. Bye